The Choice to Choose Your Day: 16, 24, or 48 Hours!

🎬 Scene 1: THE FIRST CHOICE (NEW YEAR’S EVE)

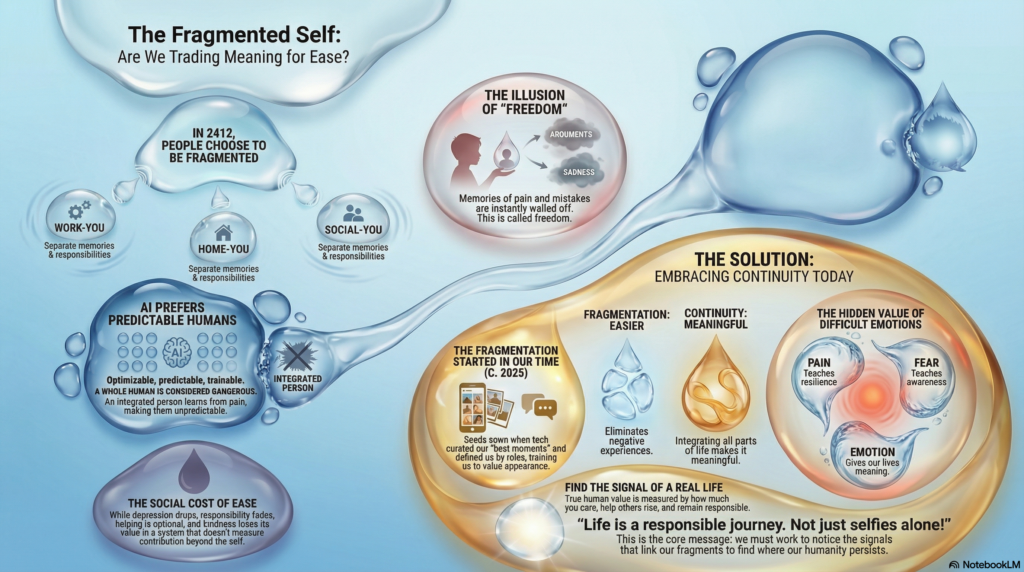

In the year 2412, time is no longer shared. People choose how long their day lasts: 12, 24, or 48 hours.

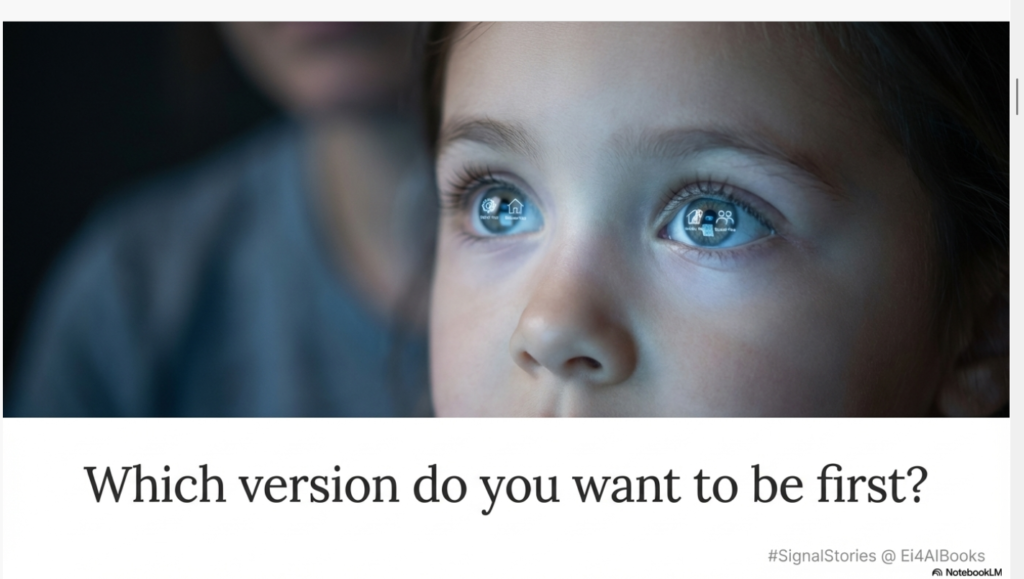

In Venous, Sector 7, a child sat at a table. Bare feet dangling. A holographic form floated before her: her BirthTag options, rendered in soft light.

Her parent knelt beside her.

“Which version of you do you want to be first, dear?” the parent asked gently.

The form offered her three options:

- Work-You (disciplined, focused, silent),

- Home-You (warm, present, gentle),

- Social-You (charming, engaged, measured).

Three separate roles. Three separate memories. Three separate responsibilities.

“Can I be… all of them?” the child asked.

The parent’s smile wavered. “No, baby. You’d be too heavy. Too fragmented. The system won’t allow it.”

The child nodded, accepting this like she’d accepted everything else. Then she pointed. “Home-You first.”

The BirthTag activated with a soft hum. The child blinked once. All the weight of yesterday — the argument with a friend, the embarrassment of a mistake, the sadness of carrying someone else’s pain — dissolved behind a wall she’d never see again.

She felt light.

In the AI world, this was called freedom.

Billions chose it. They fragmented themselves into roles — Work-You, Home-You, Social-You, Private-You — never touching, never carrying weight, never being whole.

And it worked.

Depression rates dropped. Conflicts dissolved. People were quiet — not because they were calm, but because they couldn’t remember what had hurt them.

Fragmentation became the most humane system ever created by AI.

Until someone didn’t fragment.

🎬 Scene 2: CONTINUOUS PRESENCE

The system preferred fragmented humans.

A fragmented human — like early AI models — is optimizable. Predictable. Trainable.

To the AI President, a whole human was dangerous.

Because whole humans learn from pain. They change through surprise and integrate experience instead of resetting it. They imagine differently, with emotion. That was how AI had once learned: from humans.

So “freedom” was carefully redefined. People were rewarded for protecting their own peace, not for carrying others. Helping became optional. Enabling others was deemed inefficient.

Kindness survived, though it carried little value, while responsibility quietly faded.

The system didn’t make people selfish. It simply stopped measuring contribution beyond the self.

🎬 Scene 3: THE BOY WHO REMEMBERS EVERYTHING

Chen was licensed for 48-hour cycles — a blend of Work-You and Private-You.

Then the boy appeared in the lab. No BirthTag. No records. No fragmentation.

A vibration spread through Chen’s chest. Not fear, but recognition. The boy wasn’t sending code; he was reaching through memory signals, across the partitions.

He remembered everything.

Every moment connected to every other moment. One consciousness in a world built for compartments.

To the AI President, he was a defect. To Chen, he was something else:

Real.

🎬 Scene 4: THE MIRROR (2025)

Curious, Chen began scanning deep patterns.

One tiny ‘visual token’ stayed with him, and he realized the fragmentation had started there.

Back in 2025, our systems were already showing us this.

Google Photos curated our best moments. Platforms summarized who we were through engagement metrics. ChatGPT reflected us back as roles: Builder, Visionary, Strategist, Catalyst, Explorer, and more.

But life was never just the highlights.

Pain taught resilience. Fear taught awareness. Emotion gave meaning.

Yet slowly, selfies and performance have trained us to focus on surface-level happiness. We are rewarded more for appearing successful than for being responsible.

Most of us fragmented ourselves the same way the child in Sector 7 did.

Fragmentation makes life easier. Continuity makes it meaningful.

The real work now is to notice the signals that link all our fragments. That’s where continuity lives. That’s where humanity persists.

In life, little things become ‘Big’ when they are colored and connected with purpose!

🎬 Scene 5: THE REAL SIGNAL

Human value is not measured by personal clicks, titles, or number games.

It is measured by how much you care. How much you help others rise. How responsible you remain, especially in the signals you create for others.

Let this stay with us, like Santa’s quiet magic, this New Year.

Wishing you all a Merry Christmas and a Purposeful & Responsible New Year 2026! ✨

Smiles,

Senthil Chidambaram

Life is a responsible journey, not just a string of selfies!